
The Data Sprawl Problem

Your business data is everywhere.

Customer interactions live in your CRM. Campaign performance is scattered across Google Ads, Meta, and LinkedIn. Website analytics sit in GA4. Finance numbers hide in Stripe or QuickBooks. Operations data? Yet another platform.

Each tool does its job well, but together they create data silos: fragments of information that don’t tell the whole story. The result?

Reports that don’t match across teams

Hours wasted stitching spreadsheets

Decisions made on partial or outdated data

In 2025, with companies running more SaaS apps than ever, the cost of fragmented data isn’t just inefficiency; it’s lost revenue, compliance risk, and missed growth opportunities.

That’s where data consolidation comes in. At its core, it’s the practice of pulling all your business data, whether from marketing platforms, sales systems, or finance tools, into one reliable source of truth.

This blog isn’t just another definition piece. We’ll explore:

What data consolidation really means (without jargon)

Why it’s become critical now

Methods and challenges (and how to overcome them)

Best practices and real-world examples

How marketers and business leaders can unlock faster, smarter decisions

By the end, you’ll have a roadmap to go from data chaos → clarity → confident growth.

What is Data Consolidation?

At its simplest, data consolidation is the process of combining information from multiple sources into a single, unified view.

Think of it like running a company with 10 branch offices. Each office keeps its own records: sales numbers, customer details, and expenses. If leadership wants to understand performance, they can either:

Call each office, wait for reports, and manually stitch them together (slow, error-prone).

Or, bring all records into one central system that updates automatically (fast, reliable).

That second option is data consolidation.

It’s different from related terms you might hear:

Data integration → focuses on connecting systems so they can “talk” to each other.

Data migration → moving data from one system to another, usually once.

Data consolidation → continuously combines data across systems into a single source of truth.

Figure: On the left, disconnected apps (CRM, Google Ads, Stripe, GA4, etc.) with arrows pointing everywhere; on the right, the same apps feeding into one clean dashboard labeled “Unified Data Hub.”

Why it matters: Consolidation doesn’t just tidy up. It creates a foundation for accurate reporting, advanced analytics, AI adoption, and better decision-making, because everyone works from the same trusted data.

Why Data Consolidation Matters in 2025

The idea of centralizing data isn’t new. What’s different in 2025 is the scale, speed, and stakes.

1. The Scale of SaaS Adoption

The average mid-sized company now runs 100+ SaaS apps, each producing critical data. Without consolidation, insights stay locked in silos, and leadership flies blind.

2. The Speed of Decision-Making

Markets shift daily. Real-time marketing optimization, instant fraud detection, or dynamic pricing all depend on consolidated data streams. In a world where speed is strategy, disjointed reporting just can’t keep up.

3. The Stakes of Compliance & Trust

Regulations like GDPR and CCPA demand consistent, auditable data flows. Errors in reconciliation can lead to fines, lost trust, and reputational damage: risks too costly to ignore.

Role-Specific Impact

Marketers → Unified data unlocks accurate attribution and AI-driven campaign optimization.

Finance → Consolidation ensures real-time revenue visibility and audit-ready compliance.

Operations → Integrated supply and demand data improves forecasting and resource allocation.

Executives → One trusted view enables faster pivots in strategy and investment.

Consolidation isn’t just about tidying up data pipelines anymore. It’s about making sure decisions are powered by accurate, real-time insights while staying compliant and competitive.

Methods of Data Consolidation: Which One Fits You?

There isn’t a one-size-fits-all approach to data consolidation. The right method depends on how fast you need insights, the complexity of your data, and your team’s technical maturity.

Let’s break down the most common methods, plus where they fit best.

1. ETL (Extract, Transform, Load)

How it works: Data is pulled from sources, cleaned/transformed, then loaded into a warehouse.

Best for: Companies that need curated, standardized data for reporting.

Watch out for: Longer processing times; not ideal for real-time needs.
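To make the order of operations concrete, here is a minimal ETL sketch in Python. It assumes two hypothetical billing exports with different shapes (one in cents, one in dollar strings) and uses an in-memory SQLite database as a stand-in warehouse; all table and field names are illustrative. The point is that cleaning and standardizing happen in Python before anything is loaded.

```python
import sqlite3

# Hypothetical raw exports from two billing systems with different conventions.
SOURCE_A = [{"id": "a1", "amount_cents": 12050, "created": "2025-03-01"}]
SOURCE_B = [{"InvoiceID": "b7", "Total": "99.90", "Date": "2025-03-01"}]


def extract():
    """Extract: pull raw rows from each source (here, just return the lists)."""
    return SOURCE_A, SOURCE_B


def transform(a_rows, b_rows):
    """Transform: map both shapes onto one schema with amounts in dollars."""
    unified = [("A", r["id"], r["created"], r["amount_cents"] / 100) for r in a_rows]
    unified += [("B", r["InvoiceID"], r["Date"], float(r["Total"])) for r in b_rows]
    return unified


def load(rows, conn):
    """Load: write only the curated rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS revenue "
        "(source TEXT, record_id TEXT, day TEXT, amount_usd REAL)"
    )
    conn.executemany("INSERT INTO revenue VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(*extract()), conn)
print(conn.execute("SELECT day, SUM(amount_usd) FROM revenue GROUP BY day").fetchall())
```

Because the transform step runs before loading, only curated rows ever reach the warehouse, which is also why changing the transformation logic usually means re-extracting and reprocessing the source data.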

2. ELT (Extract, Load, Transform)

How it works: Raw data goes into a warehouse/lake first, then transformations happen inside.

Best for: Cloud-first companies leveraging scalable warehouses (Snowflake, BigQuery, Redshift).

Watch out for: Requires strong governance to avoid “data swamp” issues.
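For contrast, a minimal ELT sketch under similar assumptions (in-memory SQLite standing in for Snowflake, BigQuery, or Redshift; hypothetical table names): raw rows land untouched in a staging table, and the cleanup runs afterwards as SQL inside the warehouse.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Load: land raw rows untouched (text columns, original spelling) in a staging table.
conn.execute("CREATE TABLE raw_ad_spend (campaign TEXT, cost TEXT, day TEXT)")
conn.executemany(
    "INSERT INTO raw_ad_spend VALUES (?, ?, ?)",
    [
        (" Spring Sale ", "120.50", "2025-03-01"),
        ("spring sale", "30", "2025-03-01"),
        ("Brand", "15.25", "2025-03-01"),
    ],
)

# Transform: runs inside the warehouse as SQL, after loading.
conn.execute(
    """
    CREATE VIEW ad_spend_daily AS
    SELECT LOWER(TRIM(campaign)) AS campaign,
           day,
           SUM(CAST(cost AS REAL)) AS spend
    FROM raw_ad_spend
    GROUP BY LOWER(TRIM(campaign)), day
    """
)

for row in conn.execute("SELECT * FROM ad_spend_daily ORDER BY campaign"):
    print(row)
```

Keeping the raw table means transformations can be revised and rerun without re-extracting, but it is also how ungoverned staging tables pile up into the “data swamp” the text warns about.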

3. Data Warehousing

How it works: Centralized storage for structured, cleaned data.

Best for: Analytics-heavy organizations needing consistent reporting.

Watch out for: Can be costly and rigid if business needs shift often.

4. Data Lakes & Lakehouses

How it works: Lakes store raw structured and unstructured data at scale; a lakehouse adds warehouse-style governance and analytics on top.

Best for: Enterprises handling diverse data types (clickstreams, logs, IoT).

Watch out for: Without proper management, can become unsearchable “data swamps.”

5. Data Virtualization

How it works: Creates a unified view of data across systems without physically moving it.

Best for: Fast queries across multiple sources, without heavy pipelines.

Watch out for: Performance bottlenecks if underlying systems are slow.
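A toy illustration of the virtualization idea, assuming two separate in-memory SQLite databases as stand-ins for a CRM and a billing system (schemas are hypothetical). The hypothetical `customer_view` function answers each request by querying both systems live, so nothing is copied into a central store.

```python
import sqlite3

# Two independent systems (stand-ins for, say, a CRM and a billing database).
crm = sqlite3.connect(":memory:")
crm.execute("CREATE TABLE contacts (email TEXT, name TEXT)")
crm.execute("INSERT INTO contacts VALUES ('ana@example.com', 'Ana')")

billing = sqlite3.connect(":memory:")
billing.execute("CREATE TABLE invoices (email TEXT, amount REAL)")
billing.executemany(
    "INSERT INTO invoices VALUES (?, ?)",
    [("ana@example.com", 40.0), ("ana@example.com", 60.0)],
)


def customer_view(email):
    """Virtual view: query each source at read time; nothing is copied or stored."""
    name_row = crm.execute(
        "SELECT name FROM contacts WHERE email = ?", (email,)
    ).fetchone()
    total = billing.execute(
        "SELECT COALESCE(SUM(amount), 0) FROM invoices WHERE email = ?", (email,)
    ).fetchone()[0]
    return {
        "email": email,
        "name": name_row[0] if name_row else None,
        "lifetime_value": total,
    }


print(customer_view("ana@example.com"))
```

Every call hits both sources, so the view can only be as fast as the slowest system behind it, which is exactly the performance bottleneck noted above.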

6. API-Driven & No-Code Consolidation (Modern Trend)

How it works: SaaS tools and connectors (e.g., Zapier, Fivetran, ReportDash DataStore) sync data without heavy coding.

Best for: Teams wanting speed, automation, and lower engineering overhead.

Watch out for: Can hit limits with very large or complex datasets.
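Connector tools hide most of this, but a common pattern underneath is incremental sync: remember a cursor (here, the latest `updated_at` seen) and fetch only records changed since then. This is a sketch under stated assumptions, not how Zapier, Fivetran, or ReportDash DataStore are implemented: `fetch_page` is a hypothetical stand-in for a paginated SaaS API, and timestamps are assumed to be ISO 8601 strings in one consistent format so they compare correctly as text.

```python
from typing import Dict, List, Tuple

# Hypothetical upstream records; a real connector would call a SaaS API instead.
UPSTREAM = [
    {"id": 1, "status": "open", "updated_at": "2025-03-01T10:00:00"},
    {"id": 2, "status": "won", "updated_at": "2025-03-01T11:00:00"},
    {"id": 1, "status": "won", "updated_at": "2025-03-02T09:00:00"},
]


def fetch_page(since: str, offset: int, limit: int = 2) -> List[Dict]:
    """Hypothetical paginated endpoint: records changed after `since`, oldest first."""
    changed = sorted(
        (r for r in UPSTREAM if r["updated_at"] > since),
        key=lambda r: r["updated_at"],
    )
    return changed[offset:offset + limit]


def incremental_sync(dest: Dict[int, Dict], cursor: str) -> Tuple[Dict[int, Dict], str]:
    """Pull only records newer than the saved cursor and upsert them by id."""
    offset, new_cursor = 0, cursor
    while True:
        page = fetch_page(cursor, offset)
        if not page:
            break
        for rec in page:
            dest[rec["id"]] = rec  # upsert: the latest version of each id wins
            new_cursor = max(new_cursor, rec["updated_at"])
        offset += len(page)
    return dest, new_cursor


table, cursor = incremental_sync({}, cursor="")
print(table[1]["status"], cursor)
```

Calling `incremental_sync` again with the saved cursor fetches nothing until upstream records change, which keeps repeated syncs light.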

7. AI-Assisted Consolidation (Emerging in 2025)

How it works: AI automates schema mapping, detects anomalies, and suggests transformations.

Best for: Businesses scaling fast, needing smarter pipelines with less manual effort.

Watch out for: Still maturing; requires oversight to avoid “black box” errors.
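AI-assisted tools may use learned models to propose mappings; this sketch does not. As a simple, clearly non-AI stand-in, it uses Python's standard `difflib` string similarity to suggest source-to-target column mappings and sends anything below a similarity cutoff to a human review list, the kind of oversight recommended above. The target schema and the 0.6 cutoff are assumptions.

```python
import difflib
import re

# Hypothetical target schema in the central warehouse.
TARGET_SCHEMA = ["customer_email", "order_total", "order_date", "campaign_name"]


def normalize(col: str) -> str:
    """Lowercase and collapse separators so 'Order Total' and 'order-total' compare equal."""
    return re.sub(r"[^a-z0-9]+", "_", col.lower()).strip("_")


def suggest_mapping(source_cols, cutoff=0.6):
    """Suggest a target column per source column; unmatched ones go to human review."""
    mapping, review = {}, []
    for col in source_cols:
        match = difflib.get_close_matches(normalize(col), TARGET_SCHEMA, n=1, cutoff=cutoff)
        if match:
            mapping[col] = match[0]
        else:
            review.append(col)
    return mapping, review


mapping, review = suggest_mapping(["Customer Email", "order-total", "OrderDate", "fx_rate"])
print(mapping)
print(review)
```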

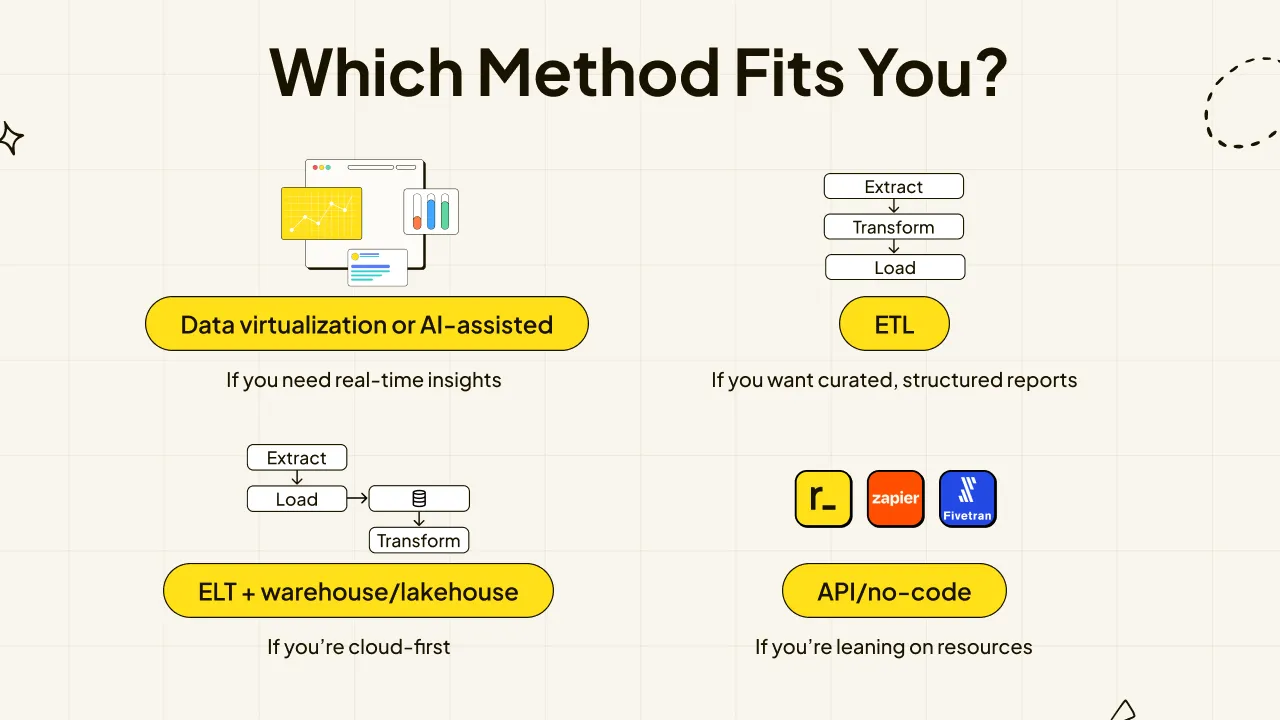

“Which Method Fits You?”

If you need real-time insights → Data virtualization or AI-assisted.

If you want curated, structured reports → ETL.

If you’re cloud-first → ELT + warehouse/lakehouse.

If you’re lean on resources → API/no-code.

Common Challenges (and How to Fix Them)

Even with the best intentions, most data consolidation projects hit roadblocks. Here are the most common challenges and how to get past them.

1. Data Silos Persist

Problem: Marketing, sales, and product teams each use different tools that don’t talk to each other.

Why it matters: Leads to duplicated work, inconsistent numbers, and blind spots in customer journeys.

Fix: Adopt connectors/APIs or no-code integration platforms to unify data sources. Start small (e.g., CRM + ad platforms) before expanding.
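As a minimal example of starting small, this sketch joins hypothetical ad-platform spend with CRM leads on a shared campaign name to get cost per lead. It assumes leads carry a `utm_campaign` value that matches the ad campaign name exactly, which in practice is often the first thing that needs standardizing.

```python
from collections import defaultdict

# Hypothetical exports: ad spend per campaign and CRM leads tagged with a UTM campaign.
ad_spend = [
    {"platform": "google_ads", "campaign": "spring_sale", "spend": 300.0},
    {"platform": "meta", "campaign": "spring_sale", "spend": 200.0},
    {"platform": "meta", "campaign": "retargeting", "spend": 50.0},
]
crm_leads = [
    {"lead_id": "L1", "utm_campaign": "spring_sale"},
    {"lead_id": "L2", "utm_campaign": "spring_sale"},
    {"lead_id": "L3", "utm_campaign": "organic"},
]


def cost_per_lead(spend_rows, lead_rows):
    """Join spend and leads on campaign name; None means spend with no attributed leads."""
    spend = defaultdict(float)
    for row in spend_rows:
        spend[row["campaign"]] += row["spend"]  # sum spend across platforms
    leads = defaultdict(int)
    for row in lead_rows:
        leads[row["utm_campaign"]] += 1
    return {c: (spend[c] / leads[c] if leads[c] else None) for c in spend}


print(cost_per_lead(ad_spend, crm_leads))
```

Campaigns with spend but no attributed leads come back as `None` rather than raising an error, and leads from campaigns with no spend (such as `organic` here) are left out of the result.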

2. Poor Data Quality

Problem: Inconsistent naming conventions, missing fields, and duplicate entries.

Why it matters: “Garbage in, garbage out.” Dashboards built on bad data can’t be trusted.

Fix: Implement automated data cleaning rules during ingestion. Use validation checks to flag anomalies before data reaches reports.
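A minimal sketch of validation at ingestion, with hypothetical rules and field names: each incoming row is checked, and failing rows are quarantined along with the reasons instead of flowing into reports. The email pattern is deliberately loose and is an assumption, not a complete address validator.

```python
import re
from datetime import date

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # loose check, by assumption


def validate(row):
    """Return a list of rule violations for one incoming row (empty list = clean)."""
    errors = []
    if not row.get("email") or not EMAIL_RE.match(row["email"]):
        errors.append("invalid email")
    amount = row.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        errors.append("missing or negative amount")
    try:
        date.fromisoformat(row.get("order_date") or "")
    except ValueError:
        errors.append("bad order_date")
    return errors


def ingest(rows):
    """Split a batch into clean rows and quarantined rows before anything reaches reports."""
    clean, quarantined = [], []
    for row in rows:
        problems = validate(row)
        if problems:
            quarantined.append((row, problems))
        else:
            clean.append(row)
    return clean, quarantined


clean, bad = ingest([
    {"email": "ana@example.com", "amount": 40.0, "order_date": "2025-03-01"},
    {"email": "not-an-email", "amount": -5, "order_date": "03/01/2025"},
])
print(len(clean), bad[0][1])
```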

3. High Costs & Complexity

Problem: Enterprise warehouses or lakes can be expensive to build and maintain.

Why it matters: Teams end up stuck with half-finished projects or bloated infrastructure.

Fix: Start with scalable, pay-as-you-go cloud solutions. For lean teams, consider no-code or hybrid approaches to balance cost vs. control.

4. Lack of Real-Time Insights

Problem: Batch ETL pipelines can take hours (or even days) to refresh.

Why it matters: Decisions get delayed, especially in fast-moving campaigns.

Fix: Use ELT or data virtualization for near-real-time access. Reserve ETL for high-value curated data.

5. Governance & Compliance Risks

Problem: Sensitive data (PII, financials) is pulled without proper access controls.

Why it matters: Risk of regulatory fines and broken customer trust.

Fix: Define clear data ownership, role-based access, and automated audit trails. Integrate compliance checks into your pipelines.
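A minimal sketch of role-based access with an audit trail, assuming a hypothetical in-code policy that lists which columns each role may see. Disallowed columns are masked, unknown roles see nothing unmasked, and every read appends a JSON audit entry. A real deployment would typically enforce this in the warehouse or access layer and write audit entries to append-only storage.

```python
import json
from datetime import datetime, timezone

# Hypothetical role policy: which columns each role may see unmasked.
POLICY = {
    "marketer": {"campaign", "spend", "region"},
    "finance": {"campaign", "spend", "region", "customer_email", "revenue"},
}
AUDIT_LOG = []  # stand-in for an append-only audit store


def read_rows(user, role, rows):
    """Return rows with non-permitted columns masked, and record who read what."""
    allowed = POLICY.get(role, set())  # unknown role: deny by default
    result = [{k: (v if k in allowed else "***") for k, v in row.items()} for row in rows]
    AUDIT_LOG.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "rows": len(rows),
        "masked": sorted({k for row in rows for k in row} - allowed),
    }))
    return result


rows = [{"campaign": "spring_sale", "spend": 300.0, "customer_email": "ana@example.com"}]
print(read_rows("sam", "marketer", rows))
print(AUDIT_LOG[-1])
```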

6. Overwhelmed Teams

Problem: Business users get lost in technical jargon or complex tools.

Why it matters: Consolidation efforts stall because adoption is low.

Fix: Choose user-friendly platforms. Layer in AI-driven insights or prebuilt templates so marketers and execs can actually use the data.

By tackling these challenges head-on with the right mix of tools and processes, businesses can turn data consolidation from a headache into a growth driver.

Best Practices for a Successful Data Consolidation Strategy

Consolidating data from multiple sources is no small task. Large volumes of raw data, fragmented systems, and different data formats often slow down the process. To build a reliable and scalable foundation, here are the best practices every business should follow.

1. Identify and Prioritize Data Sources

Not all data is equally valuable. Begin by mapping your existing data assets (CRMs, ERPs, marketing platforms, financial systems) and identify the data sources that have the biggest impact on business operations. This step helps avoid wasting time on irrelevant or duplicate data.

2. Standardize Data Formats Early

Data from multiple sources often comes in different structures, file types, or naming conventions. Standardizing data formats during the consolidation process improves data consistency, reduces errors, and ensures smooth flow into a centralized repository such as a data warehouse or data lake.
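A minimal sketch of format standardization, assuming three date formats and a single currency seen across hypothetical sources. Column names are converted to snake_case, dates to ISO 8601, and currency strings to numbers.

```python
import re
from datetime import datetime

DATE_FORMATS = ["%Y-%m-%d", "%m/%d/%Y", "%d %b %Y"]  # assumed formats seen across sources


def to_snake(name):
    """'Campaign Name' or 'campaignName' -> 'campaign_name'."""
    name = re.sub(r"([a-z0-9])([A-Z])", r"\1_\2", name)
    return re.sub(r"[^a-zA-Z0-9]+", "_", name).strip("_").lower()


def to_iso_date(value):
    """Try each known format in order and return an ISO 8601 date string."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def to_amount(value):
    """'$1,234.50' -> 1234.5 (assumes one currency and '.' as the decimal point)."""
    return float(re.sub(r"[^0-9.\-]", "", str(value)))


def standardize(row):
    """Rename keys to snake_case and coerce the known date/amount fields."""
    out = {to_snake(k): v for k, v in row.items()}
    if "date" in out:
        out["date"] = to_iso_date(out["date"])
    if "amount" in out:
        out["amount"] = to_amount(out["amount"])
    return out


print(standardize({"Campaign Name": "Spring", "Date": "03/01/2025", "Amount": "$1,234.50"}))
```

The order of `DATE_FORMATS` is itself a standardization decision: with `%m/%d/%Y` listed before any day-first format, `03/01/2025` is read as March 1, so sources that write day-first dates need their own format list.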

3. Maintain Data Quality Standards

Consolidated data is only as strong as its weakest link. Establish clear data quality standards to detect missing data, duplicate records, and inconsistencies across systems. Automated data pipelines with built-in validation rules help improve data quality without manual intervention.
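Dataset-level quality standards can also be expressed as code. This sketch, with a hypothetical 1% missing-value threshold and illustrative field names, counts missing required fields and duplicate keys in a batch and fails the batch if either rule is broken.

```python
from collections import Counter


def quality_report(rows, key, required):
    """Dataset-level checks: missing required fields and duplicate keys."""
    missing = Counter()
    for row in rows:
        for field in required:
            if row.get(field) in (None, ""):
                missing[field] += 1
    key_counts = Counter(row.get(key) for row in rows)
    duplicates = sorted(str(k) for k, n in key_counts.items() if n > 1)
    return {"rows": len(rows), "missing": dict(missing), "duplicate_keys": duplicates}


def passes(report, max_missing_rate=0.01):
    """Hypothetical standard: at most 1% missing per field and no duplicate keys."""
    if report["duplicate_keys"]:
        return False
    return all(n / report["rows"] <= max_missing_rate for n in report["missing"].values())


rows = [
    {"order_id": "A1", "email": "ana@example.com", "amount": 40.0},
    {"order_id": "A1", "email": "ana@example.com", "amount": 40.0},
    {"order_id": "A2", "email": "", "amount": 15.0},
]
report = quality_report(rows, key="order_id", required=["email", "amount"])
print(report, passes(report))
```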

4. Choose the Right Data Consolidation Tools

The right technology can make or break your data consolidation efforts. For small teams, no-code data consolidation tools can simplify the process of extracting data, transforming it, and loading it into a target system. Larger organizations may need ETL tools, cloud data warehouses, or data virtualization to support complex queries over large datasets.

5. Strengthen Data Governance & Security

Data governance isn’t just about access; it’s about accountability. Implement role-based access controls, audit trails, and compliance checks to ensure data security and regulatory compliance. This protects sensitive customer data while enabling safe data retrieval and analysis.

6. Build Scalable Data Pipelines

As data volumes grow, your infrastructure must scale with it. Automate data flow using modern pipelines that can extract data from multiple sources, transform data into usable formats, and load data efficiently into a central repository. This ensures consolidated data remains accurate, timely, and accessible.

7. Empower Data-Driven Decision Making

Consolidation isn’t the end goal; what matters is what you do with the data. Equip business users, marketers, and data engineers with business intelligence dashboards that make data analysis simple. Effective data consolidation enables businesses to gain valuable insights, improve customer experiences, and make confident, data-driven decisions.

Pair your consolidation strategy with master data management practices. This ensures data integrity across teams, reduces duplicate records, and strengthens trust in your centralized data hub.
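One core master data management step is building a “golden record” from duplicates. This sketch assumes email is the match key (normalized by trimming and lowercasing) and uses a simple “most recent non-null value wins” survivorship rule; dedicated MDM tools support richer matching and rules, and all records here are hypothetical.

```python
from collections import defaultdict

# The same customer as seen by three systems (hypothetical records).
records = [
    {"source": "crm", "email": "Ana@Example.com ", "name": "Ana", "phone": None, "updated": "2025-01-10"},
    {"source": "billing", "email": "ana@example.com", "name": "Ana Silva", "phone": None, "updated": "2025-02-01"},
    {"source": "support", "email": "ana@example.com", "name": None, "phone": "+1-555-0100", "updated": "2025-03-05"},
]


def golden_records(rows, fields=("name", "phone")):
    """Group by a normalized match key, then keep the most recent non-null value per field."""
    groups = defaultdict(list)
    for row in rows:
        groups[row["email"].strip().lower()].append(row)
    result = {}
    for key, members in groups.items():
        members.sort(key=lambda r: r["updated"], reverse=True)  # newest first
        result[key] = {f: next((m[f] for m in members if m[f]), None) for f in fields}
        result[key]["sources"] = sorted(m["source"] for m in members)
    return result


print(golden_records(records))
```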

Real-World Case Studies

Data consolidation enables businesses to turn fragmented data from multiple sources into valuable insights that drive decisions. Here are a few examples from different industries:

1. Marketing Agency: Unified Ad & Analytics Data

Challenge: Multiple advertising platforms (Google Ads, Meta, LinkedIn) and GA4 data created fragmented reports.

Solution: Using ETL pipelines and a cloud data warehouse, the agency consolidated data from multiple sources into a centralized repository.

Outcome: Campaign reporting time reduced by 70%, enabling faster data-driven decision making and better ROI optimization.

2. Retailer: Customer 360 View

Challenge: Customer data scattered across POS systems, eCommerce platforms, and loyalty programs.

Solution: Integrated data pipelines, data virtualization, and master data management combined all customer records into a single view.

Outcome: Improved data quality and data consistency, enabling personalized marketing campaigns and accurate inventory forecasting.

3. SaaS Company: Product, Billing & Support Integration

Challenge: Sales, subscription billing, and support ticket data existed in separate systems, making churn analysis difficult.

Solution: Consolidated data from multiple sources into a cloud data warehouse using ETL tools and automated data pipelines.

Outcome: Reduced churn by 20%, improved business operations, and enabled executive teams to access centralized, reliable data for strategic decisions.

Effective data consolidation projects always begin by identifying key data sources and aligning them with clear business goals. Maintaining data quality and strong data governance throughout the consolidation process is essential to prevent errors, inconsistencies, and fragmented data. When done correctly, consolidated data empowers teams across the organization to make faster, more confident, and truly data-driven decisions.

The Future of Data Consolidation

The landscape of data consolidation is evolving rapidly. Businesses no longer just need consolidated data for reporting; they require real-time, actionable insights to stay competitive. Here’s what the future holds:

1. AI-Assisted Data Consolidation

Artificial intelligence is transforming the data consolidation process. AI can automate schema mapping, detect anomalies, and even suggest transformations for large and complex datasets. This reduces manual work, improves data quality, and ensures data consistency across multiple sources.

2. Real-Time Data Pipelines

Traditional ETL or ELT pipelines often operate in batches, creating delays between data retrieval and data analysis. Modern techniques like streaming pipelines and data virtualization allow businesses to integrate data from multiple sources in near real-time. This enables faster data-driven decision making and more agile business operations.

3. Composable & Cloud-Native Architectures

Cloud data warehouses, data lakes, and hybrid solutions provide scalable infrastructure for consolidating large volumes of structured and unstructured data. Composable platforms allow teams to plug in new data sources, apply transformations, and replicate data across systems without rebuilding the entire architecture.

4. Enhanced Data Governance & Security

As regulations tighten, data governance and access controls will become central to every consolidation project. Future consolidation processes will prioritize data security, regulatory compliance, and maintaining data integrity, while ensuring teams can still access relevant data efficiently.

5. Predictive Analytics & Business Intelligence

With consolidated data in a centralized repository, businesses can move beyond reporting into predictive and prescriptive analytics. This enables valuable insights for marketing, finance, and operations, turning raw data into actionable intelligence that drives growth.

The next wave of data consolidation is about automation, real-time access, and AI-driven insights. Organizations that adopt these trends will not only improve their data quality and data management, but also gain a competitive edge by leveraging consolidated data to make faster, smarter, and more confident decisions.

How to Get Started: Your Data Consolidation Roadmap

Implementing effective data consolidation doesn’t have to be overwhelming. By following a structured roadmap, businesses can consolidate data from multiple sources, improve data quality, and unlock valuable insights efficiently.

Step 1: Audit Your Current Data Landscape

Identify all data sources, existing data assets, and the data structure in each system. Assess the quality of your raw data, note any missing data, and document how data flows across the organization. This forms the foundation for a successful consolidation process.
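A small sketch of the audit step, using hypothetical sample rows from each system: profile every source (row count, fields, empty values), then list fields that appear in more than one source, since those are candidate join keys for consolidation.

```python
def profile_source(name, rows):
    """Summarize one source: row count, fields seen, and null/empty count per field."""
    fields = sorted({k for row in rows for k in row})
    nulls = {f: sum(1 for row in rows if row.get(f) in (None, "")) for f in fields}
    return {"source": name, "rows": len(rows), "fields": fields, "nulls": nulls}


def shared_fields(profiles):
    """Fields that appear in more than one source are candidate join keys."""
    seen = {}
    for p in profiles:
        for f in p["fields"]:
            seen.setdefault(f, []).append(p["source"])
    return {f: s for f, s in seen.items() if len(s) > 1}


# Hypothetical samples pulled from each system during the audit.
inventory = [
    profile_source("crm", [{"email": "ana@example.com", "stage": "won"}, {"email": "", "stage": "open"}]),
    profile_source("billing", [{"email": "ana@example.com", "amount": 40.0}]),
    profile_source("ads", [{"campaign": "spring_sale", "spend": 300.0}]),
]
for entry in inventory:
    print(entry)
print(shared_fields(inventory))
```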

Step 2: Define Your Business Goals

Clarify what you want to achieve with consolidated data:

Faster reporting for marketing campaigns

Unified customer data for personalization

Improved business intelligence dashboards

Compliance with regulatory standards

By linking the data consolidation process to clear business outcomes, you ensure efforts focus on relevant data that drives decision-making.

Step 3: Choose the Right Data Consolidation Tools & Techniques

Select data consolidation tools and techniques that match your goals and team capabilities:

ETL or ELT pipelines for structured reporting

Data virtualization for real-time access

Cloud data warehouses or data lakes for large and complex datasets

No-code or AI-assisted tools to improve data accessibility and reduce manual effort

Step 4: Standardize and Transform Data

Apply data quality standards, standardize data formats, and transform raw data into clean, relevant data ready for analysis. Consolidated data should be consistent, accurate, and stored in a centralized repository that supports complex queries.

Step 5: Implement Governance and Security Measures

Establish data governance policies and access controls to protect sensitive information, maintain data integrity, and ensure regulatory compliance. This step is critical for sustainable data consolidation projects.

Step 6: Pilot, Measure, and Scale

Start with a small, high-impact consolidation workflow. Monitor the results, measure improvements in data consistency, data quality, and data-driven decision making, then scale the process to additional sources and systems.

Incorporate master data management practices and automated pipelines to make the consolidation process repeatable, reliable, and future-proof. Effective data consolidation enables businesses to turn fragmented data into actionable insights, powering marketing, finance, operations, and executive decision-making.

Conclusion

Data consolidation is no longer optional; it’s a strategic imperative. Businesses today generate large volumes of data from multiple sources, and without a structured data consolidation process, this data remains fragmented, inconsistent, and underutilized.

By consolidating data into a centralized repository, maintaining data quality standards, applying data governance, and leveraging modern data consolidation tools, organizations can transform raw, scattered data into relevant data that powers data-driven decision making, improves business operations, and enables actionable business intelligence.

Whether you’re a marketer optimizing campaigns, a finance leader reconciling revenue, or an operations manager forecasting demand, effective data consolidation enables businesses to gain valuable insights, maintain data integrity, and unlock the full potential of their data assets.

Take Action: To simplify your consolidation efforts and access prebuilt dashboards, automated pipelines, and centralized reporting, try ReportDash, a tool designed to help businesses consolidate, analyze, and visualize data from multiple sources in one place.